MongoDB Decay Over Time: How Deletes and Updates Clog Your Database

MongoDB is widely recognized for its flexibility, scalability, and ease of use. However, like many databases, MongoDB’s performance can deteriorate over time, especially in environments with frequent delete and update operations. Understanding the root cause of this issue is crucial to maintaining optimal performance.

Real-World Scenario: A Story of Gradual Decay

I once managed a system that had over 20 collections in MongoDB. Out of those, two collections were dedicated to capturing various events, logging millions of records daily. Each event would frequently receive updates like status changes, updated timestamps, author details, and update notes. These events were retained for 80-90 days as part of a feature requirement. To manage data volume, I set up a nightly cron job to purge data older than 90 days. This setup initially felt smooth, self-managed, and highly performant.

However, as months turned into years, I noticed that something was off. Despite the same dataset size, workload, and VM hardware, query performance began to degrade. Queries that once responded in milliseconds started taking 1-5 seconds during peak loads, and even under normal usage they still took seconds to complete.

Nothing in the surrounding environment had changed. So what was causing this mysterious performance decay?

Sherlock mode ON 🕵️

The investigation of the mysterious performance decay began with checking the indexes. I cross-checked if I was using the indexes properly, if my queries could be optimized, and if I had missed any necessary indexes.

Investigation Report:

- Am I using the indexes properly? ✅ Yes

- Can my queries be further optimized? ❌ No - they were already optimized

- Did I miss any required indexes? ❌ No

So what now? Time to conduct a second-level investigation.

I checked the collection size, record count, document size, and index size to find a clue. Everything looked normal except one thing!

The total index size (sum of all indexes) was higher than the actual document size. Surprised? I was too. I had no clue what was happening. Perhaps it was time to revisit MongoDB’s inner workings.
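Here is a minimal sketch of how that comparison could be made. The field names (`count`, `size`, `storageSize`, `totalIndexSize`) are the ones reported by `db.collection.stats()`; the helper name, the `events` collection name, and the sample numbers are my own assumptions for illustration, not values from the system described above.

```javascript
// Summarize a collStats-style object so index size and data size can be
// compared side by side. The helper name is hypothetical.
function summarizeCollectionStats(stats) {
  const toMB = (bytes) => Math.round((bytes / (1024 * 1024)) * 10) / 10;
  return {
    documents: stats.count,
    dataMB: toMB(stats.size),            // uncompressed size of all documents
    storageMB: toMB(stats.storageSize),  // bytes allocated on disk for the data
    indexMB: toMB(stats.totalIndexSize), // sum of all index sizes
    // A ratio above 1 means the indexes are larger than the documents.
    indexToDataRatio:
      stats.size > 0 ? Number((stats.totalIndexSize / stats.size).toFixed(2)) : null,
  };
}

// In mongosh, assuming a collection named "events":
//   summarizeCollectionStats(db.events.stats())

// Stand-in numbers, for illustration only.
const sampleStats = {
  count: 1000,
  size: 200 * 1024 * 1024,
  storageSize: 80 * 1024 * 1024,
  totalIndexSize: 300 * 1024 * 1024,
};
console.log(summarizeCollectionStats(sampleStats));
```

A ratio well above 1 is not proof of a problem on its own, but it is the kind of clue that made me look deeper.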

Okay, so let’s Revisit the Tech

Well, MongoDB’s official documentation says: “The WiredTiger storage engine maintains lists of empty records in data files as it deletes documents. This space can be reused by WiredTiger, but will not be returned to the operating system unless under very specific circumstances.”

Reference: https://www.mongodb.com/docs/manual/faq/storage/#how-do-i-reclaim-disk-space-in-wiredtiger-

In simple terms, MongoDB stores data in flexible-sized blocks within its storage engine (commonly WiredTiger). When data is deleted or updated, MongoDB doesn’t immediately reclaim the empty space. Instead, this space becomes fragmented, causing performance degradation over time. Here’s how:

- Deleted Records Leave Gaps: When a document is deleted, MongoDB marks that space as available. However, unless new data fits precisely into the same space, gaps remain unused, contributing to storage bloat.

- Updates Can Inflate Document Size: In MongoDB, when an updated document exceeds its original allocated space, the document is relocated to a new block. The old block becomes partially or fully unused, adding to fragmentation.

- Index Fragmentation: Frequent updates can cause index structures to become unbalanced, slowing down query performance.
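To get a rough feel for how much of a collection's file is made up of such gaps, one option is to read the WiredTiger block-manager counters that `db.collection.stats()` exposes. The sketch below assumes the `"file bytes available for reuse"` and `"file size in bytes"` counters are present in the output; the helper name and the sample numbers are hypothetical.

```javascript
// Estimate what fraction of a collection's data file is free-but-unreturned
// space, based on WiredTiger block-manager counters in a stats object.
function reusableSpace(stats) {
  const blockManager = (stats.wiredTiger || {})["block-manager"] || {};
  const reusableBytes = blockManager["file bytes available for reuse"] || 0;
  const fileBytes = blockManager["file size in bytes"] || stats.storageSize || 0;
  return {
    reusableBytes,
    fileBytes,
    reusableFraction: fileBytes > 0 ? reusableBytes / fileBytes : 0,
  };
}

// In mongosh, assuming a collection named "events":
//   reusableSpace(db.events.stats())

// Stand-in numbers, for illustration only.
const fragmentedStats = {
  storageSize: 1000,
  wiredTiger: {
    "block-manager": {
      "file bytes available for reuse": 250,
      "file size in bytes": 1000,
    },
  },
};
console.log(reusableSpace(fragmentedStats).reusableFraction);
```

A high fraction suggests that a lot of the file is space MongoDB is holding on to rather than data it is actually storing.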

Symptoms of MongoDB Performance Decay

- Increased Storage Usage: Despite regular data deletions, disk space consumption continues to grow. As the documentation quoted above says, WiredTiger does not return freed space to the operating system except under very specific circumstances.

- Slower Queries: Fragmented data leads to inefficient disk I/O, slowing down read operations. With the data scattered across the file, each query has to touch more blocks to read the same documents.

- High IOPS: As mentioned earlier, if an updated document does not fit into its old space, the whole document is rewritten to a new location and removed from the old one. That is roughly twice the work of a single write operation. These frequent document moves and index reorganizations increase the I/O load.

*If you didn’t know: I/O speed depends on your disk, specifically on how fast data can be read from or written to it. For instance, AWS EBS gp3 volumes offer a baseline performance of 3,000 IOPS. Need more speed? Well, that’ll cost you extra. Quite a classic business move, huh? Hehe!

Solution to Mitigate this MongoDB Performance Decay

Compact Collections Periodically:

Use the compact command to reorganize data and reclaim wasted space:

db.runCommand({ compact: 'collection_name' })

Sometimes complex problems need only a “compact” solution 🙂 ! I feel this one is a lifesaver.
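If several collections need the same treatment, the command can be wrapped in a small loop. This is only a sketch under stated assumptions: it expects an object with a synchronous `runCommand` method (such as `db` in mongosh), and the helper name and collection names are made up for illustration.

```javascript
// Run compact on each named collection, one at a time, and collect the
// replies so failures on one collection do not stop the rest.
function compactCollections(database, collectionNames) {
  const results = [];
  for (const name of collectionNames) {
    try {
      const reply = database.runCommand({ compact: name });
      results.push({ collection: name, ok: reply.ok === 1, reply });
    } catch (err) {
      results.push({ collection: name, ok: false, error: String(err) });
    }
  }
  return results;
}

// In mongosh, with hypothetical collection names:
//   compactCollections(db, ["events", "events_archive"])
```

On a replica set the command is not replicated, so it has to be run on each member separately. It is also worth trying it on a non-production copy first to see how long it takes on your data.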

Bonus:

Reclaiming space can be a huge saving on storage bills if you are using MongoDB Atlas 🫣 or self-managed MongoDB on clouds like AWS, GCP, etc.

Rebuild Indexes Regularly (maybe not a good idea, it’s deprecated ⚠️):

Indexes may become fragmented over time, and rebuilding them improves efficiency. We can trigger a rebuild with this command: db.collection.reIndex()

⚠️ First of all, this command is deprecated in MongoDB 6.0.

⚠️ Secondly, it’s only supported on standalone instances, not on clusters (MongoDB Atlas tiers such as M10 and M20 also run as clusters).

Resharding Strategy (only for PRO players):

Consider sharding collections if data volume and update frequency are high. Distributing data evenly can mitigate fragmentation. Be a little cautious with sharding, though: if the shard key isn’t well-distributed, certain shards may become overloaded, causing performance bottlenecks. Changing the shard key later is cumbersome and may require downtime or complex data migration.
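One quick sanity check before committing to a shard key is to look at how a sample of documents spreads across the candidate key's values. The sketch below is a rough heuristic of my own, not a MongoDB tool: the helper name, the `status` and `eventId` fields, and the sample documents are all hypothetical.

```javascript
// For a sample of documents, report how many distinct values a candidate
// shard key has and what share of documents the most common value holds.
function topValueShare(docs, field) {
  const counts = new Map();
  for (const doc of docs) {
    const key = String(doc[field]);
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  let top = 0;
  for (const n of counts.values()) top = Math.max(top, n);
  return {
    distinctValues: counts.size,
    topShare: docs.length > 0 ? top / docs.length : 0,
  };
}

// Made-up sample: "status" is heavily skewed, "eventId" is not.
const sampleDocs = [
  { eventId: "e1", status: "open" },
  { eventId: "e2", status: "open" },
  { eventId: "e3", status: "open" },
  { eventId: "e4", status: "closed" },
];
console.log(topValueShare(sampleDocs, "status"));
console.log(topValueShare(sampleDocs, "eventId"));
```

A key with few distinct values, or one value that dominates, is a hint that some shards would end up carrying most of the load.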

Archiving Old Data (SASI - Smart and Simple Idea):

Moving less frequently accessed data to separate collections or storage solutions reduces fragmentation in active collections. In my case, the events stopped receiving updates after 7 days, so I moved (the correct term is ‘archived’) data older than 7 days into another collection, where each document is written with a single insert. Hence, in the archive collection, the indexes and document sizes stay extremely consistent.
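A move like this could be sketched with an aggregation that copies old documents into the archive via `$merge`, followed by a delete on the active collection. The `updatedAt` field, the `events` and `events_archive` collection names, and the helper names are assumptions for illustration, not the exact job I ran.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Date before which documents are considered ready for archiving.
function archiveCutoff(now, days) {
  return new Date(now.getTime() - days * DAY_MS);
}

// Pipeline that copies documents older than the cutoff into the archive
// collection, skipping any that were already archived.
function buildArchivePipeline(cutoff, archiveName) {
  return [
    { $match: { updatedAt: { $lt: cutoff } } },
    { $merge: { into: archiveName, whenMatched: "keepExisting", whenNotMatched: "insert" } },
  ];
}

// In mongosh, with assumed collection names:
//   const cutoff = archiveCutoff(new Date(), 7);
//   db.events.aggregate(buildArchivePipeline(cutoff, "events_archive")).toArray();
//   db.events.deleteMany({ updatedAt: { $lt: cutoff } });
```

Using the same cutoff value for both the copy and the delete keeps the two steps working on the same set of documents.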

However, you must set up a cron job for permanent deletions, or use a TTL (time-to-live) index to expire documents, so that the archive collection does not become too bulky. You can also run the `compact` command after the cron job has deleted from the archive, to reclaim its space too.
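For the TTL route, the index is an ordinary single-field index on a date field with an `expireAfterSeconds` option. A minimal sketch, assuming the archive documents carry a date field named `archivedAt` (my invention, as are the helper and collection names):

```javascript
// Build the key pattern and options for a TTL index that expires documents
// a given number of days after the date stored in `dateField`.
function ttlIndexSpec(dateField, days) {
  return {
    keys: { [dateField]: 1 },
    options: { expireAfterSeconds: days * 24 * 60 * 60 },
  };
}

// In mongosh, with assumed names:
//   const { keys, options } = ttlIndexSpec("archivedAt", 90);
//   db.events_archive.createIndex(keys, options);

console.log(ttlIndexSpec("archivedAt", 90));
```

TTL expiry is still a delete under the hood, so the freed space behaves the same way as with a cron job, and an occasional `compact` remains useful.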

Regularly Monitor Database Health:

Tools like mongostat, mongotop, and third-party solutions such as Grafana, Prometheus, or MongoDB Atlas Insights can provide real-time insights into storage usage and performance. You can also set alerts in these tools based on the nature of your workload, so you stay vigilant if something still goes wrong.

My Two Cents on Best Practices for Future-Proofing

- Design your schemas with minimal data modifications in mind.

- Use TTL (Time-To-Live) indexes for automatically expiring data to reduce manual deletions.

- Implement proper indexing strategies to ensure efficient data retrieval.

- Always revisit the technology (and maybe https://revisit.tech too) to learn something new that was either missed or has changed over time. It’s a good habit to keep yourself updated.

Conclusion

MongoDB’s performance decay due to fragmentation is a common yet manageable challenge. By understanding the root cause and implementing proactive strategies, you can maintain MongoDB’s speed and efficiency, even in high-churn environments like the one described in this post. Regular maintenance, thoughtful data design, and active monitoring are key to overcoming this silent performance killer.

If you liked this post, or it helped you, let me know your reaction in the comments below. If you have found a mistake, or have suggestions or thoughts to share, hit the comment section too. And if you think this post is worth a read or could help a friend or colleague, feel free to share it.

Thanks for reading till here! See you next time, next post. Do Revisit.Tech again!

Releted Posts

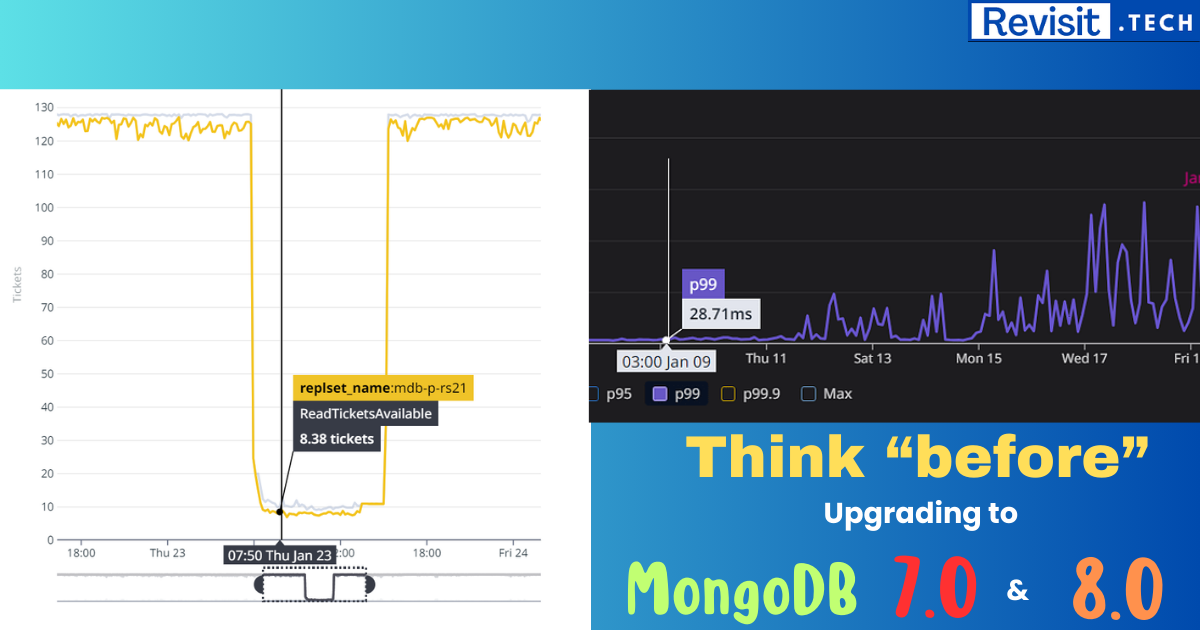

MongoDB 8.0 Performance is 36% higher, but there is a catch…

TLDR: If your app is performance critical, think twice, thrice before upgrading to MongoDB 7.0 and 8.0. Here is why…

Read moreHow Redis Helps to Increase Service Performance in NodeJS

In modern backend applications, performance optimization is crucial for handling high traffic efficiently. Typically, a backend application consists of business logic and a database.

Read more

Optimizing MongoDB Performance with Indexing Strategies

MongoDB is a popular NoSQL database that efficiently handles large volumes of unstructured data. However, as datasets grow, query performance can degrade due to increased document scans.

Read more